Sync makes apps awesome

Sync is the magic behind fast apps and the key to collaborative AI

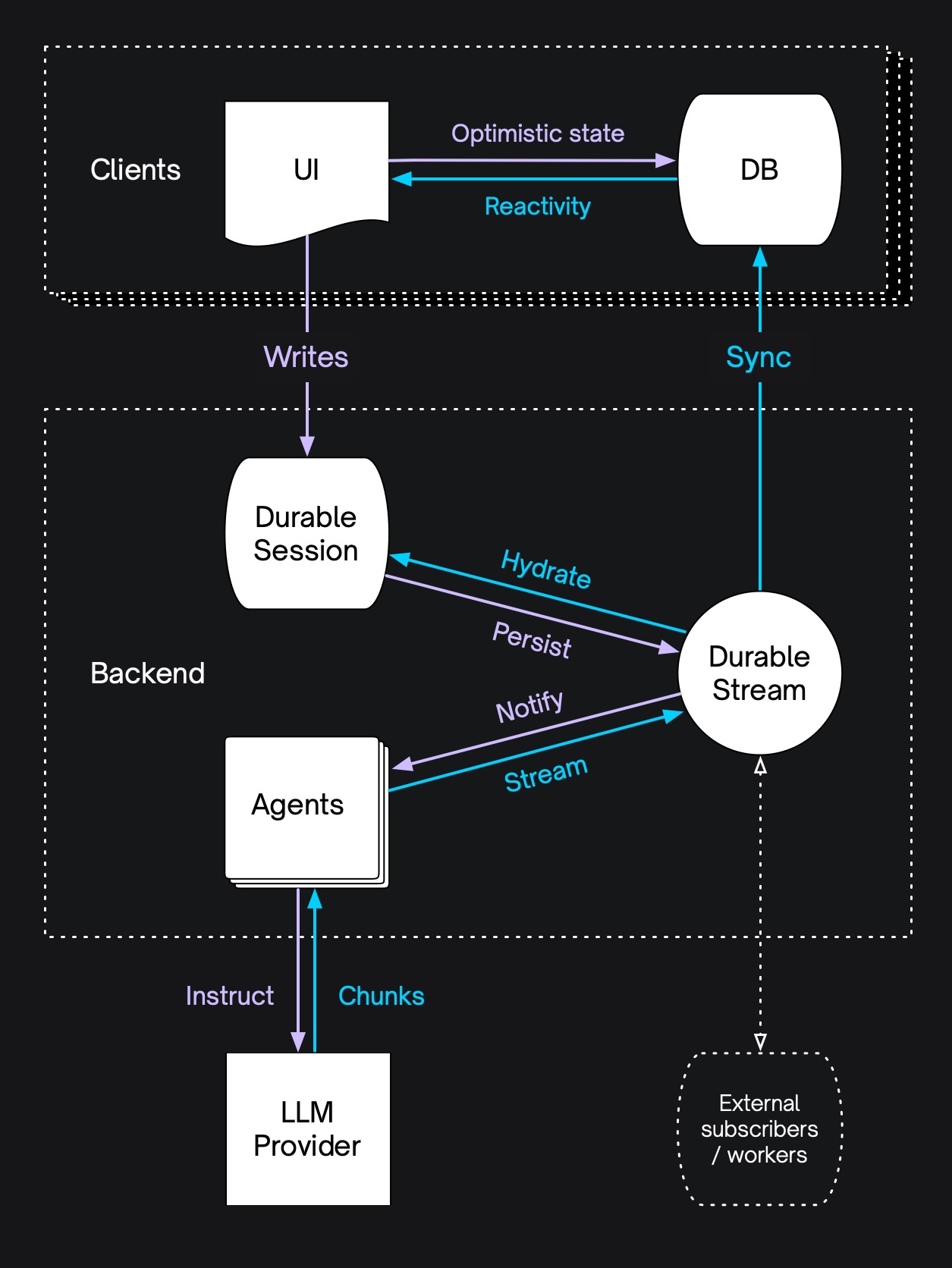

Sync makes your apps super-fast, with end-to-end reactivity, resilience and built-in multi-user collaboration.

Build fast, modern apps like Figma and Linear. With sub-millisecond reactivity and instant local writes.

Build apps that work reliably, even with patchy connectivity. With resilient transport that ensures data is never lost.

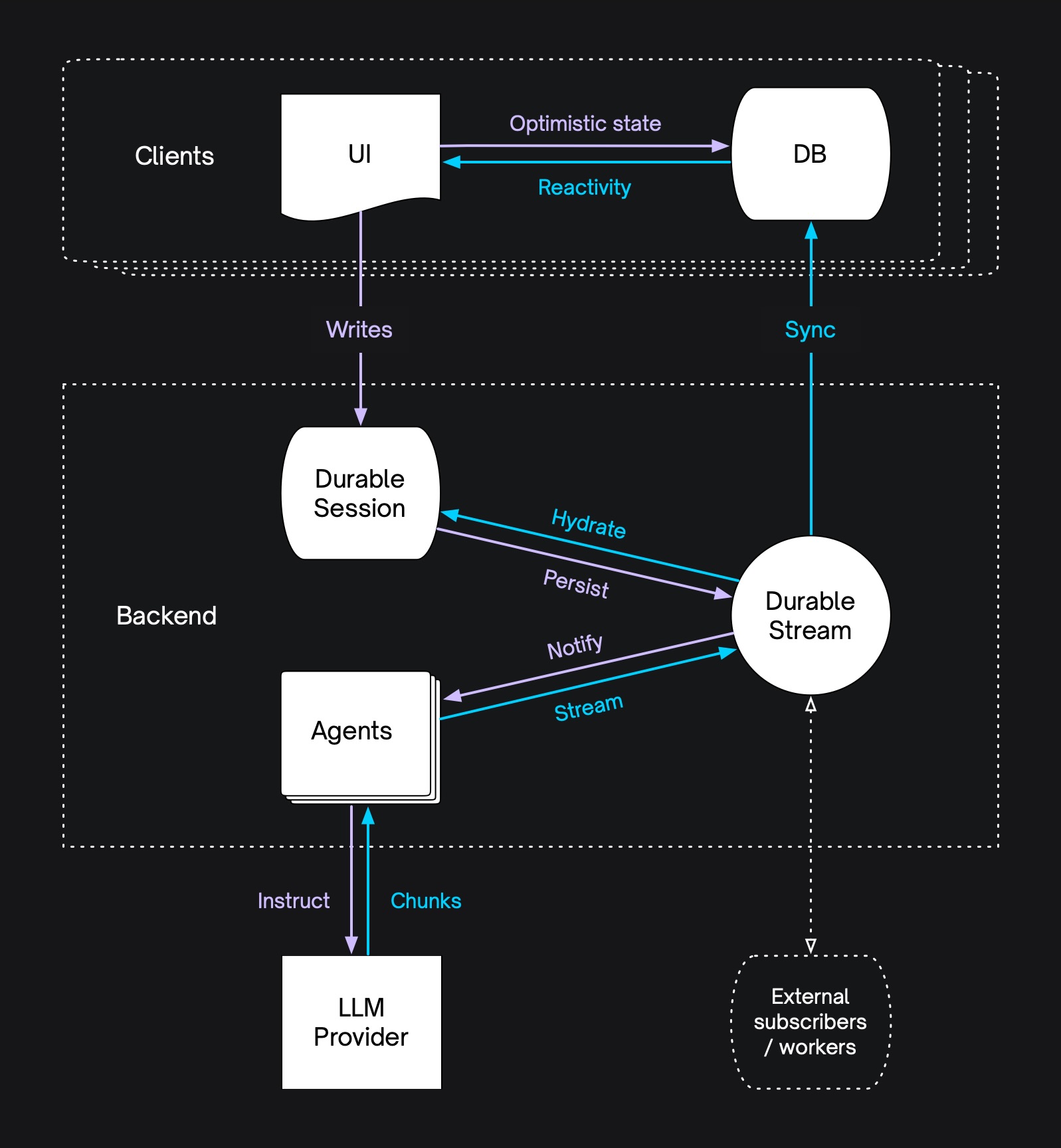

Build multi-user, multi-agent apps that naturally support both real-time and asynchronous collaboration.

Build multi-step agentic workflows that resume after failures. With agents and workers syncing and resuming from durable state.
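To make "resuming from durable state" concrete, here is a minimal sketch of the idea. It is not this product's API: the `run_workflow` helper, the step names and the local JSON file are all hypothetical stand-ins, with the file playing the role that synced, shared state would play in a real system. Each step's result is persisted as soon as it completes, so a rerun skips finished steps and picks up at the one that failed.

```python
import json
import os


def run_workflow(steps, state_path):
    """Run (name, fn) steps in order, skipping any already recorded as done.

    Hypothetical helper: a local JSON file stands in for durable, synced state.
    """
    # Load durable state if a previous run left some behind.
    if os.path.exists(state_path):
        with open(state_path) as f:
            state = json.load(f)
    else:
        state = {"done": {}}

    for name, fn in steps:
        if name in state["done"]:
            continue  # completed in an earlier run; do not repeat it
        # Each step receives the results of the steps before it.
        state["done"][name] = fn(state["done"])
        # Persist after every step so a crash loses at most one step of work.
        tmp_path = state_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, state_path)  # atomic swap of the state file

    return state["done"]
```

If a step raises, the exception propagates and the state file keeps everything completed so far. Calling `run_workflow` again with the same steps and path continues from the failed step instead of starting over. Step results must be JSON-serializable in this sketch.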

As the world moves to getting things done through agents, the winners are the products that combine AI with team-based collaboration.

AI apps and agentic systems built on a single-user <> single-agent, request <> response paradigm don't cut it.

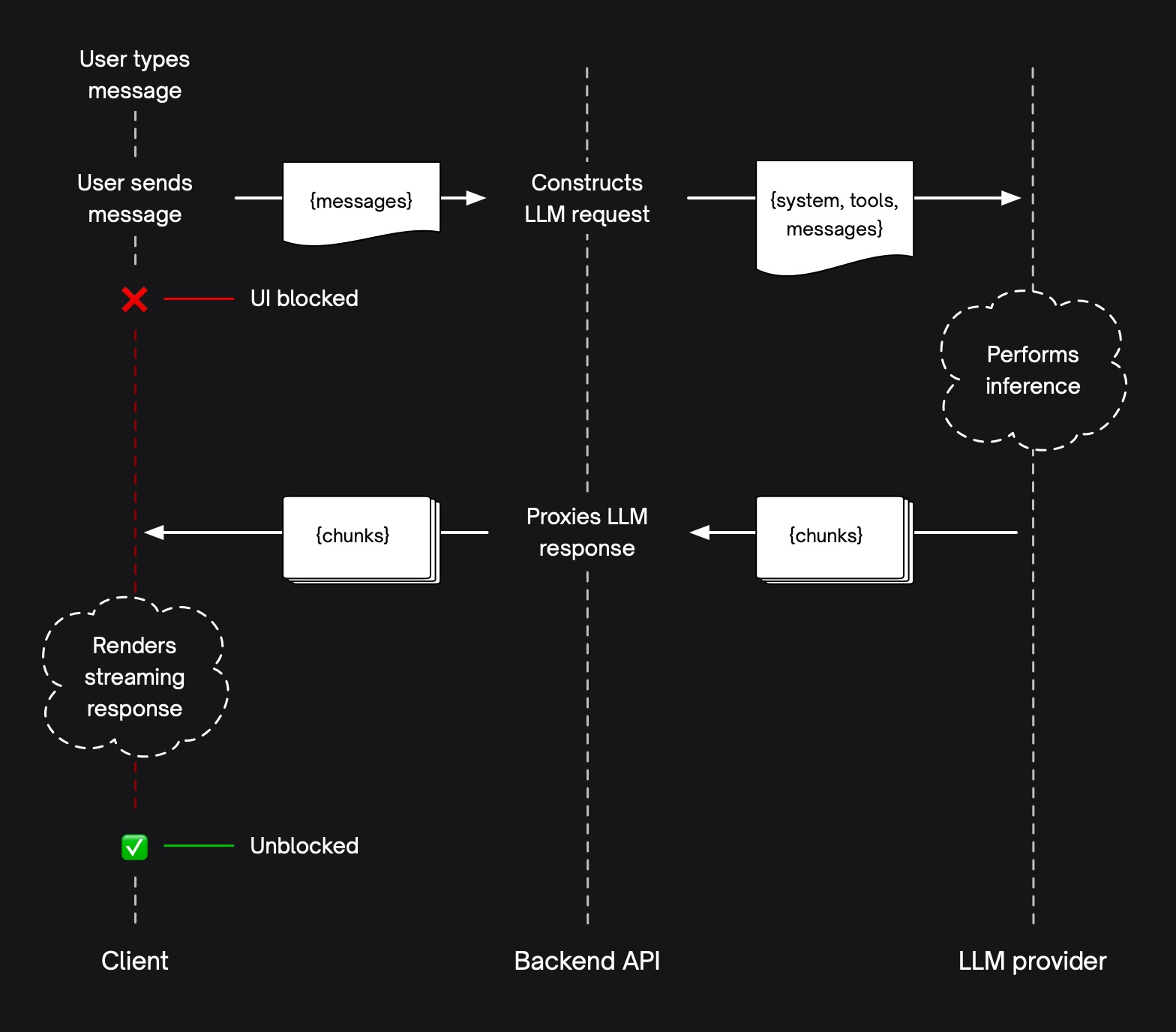

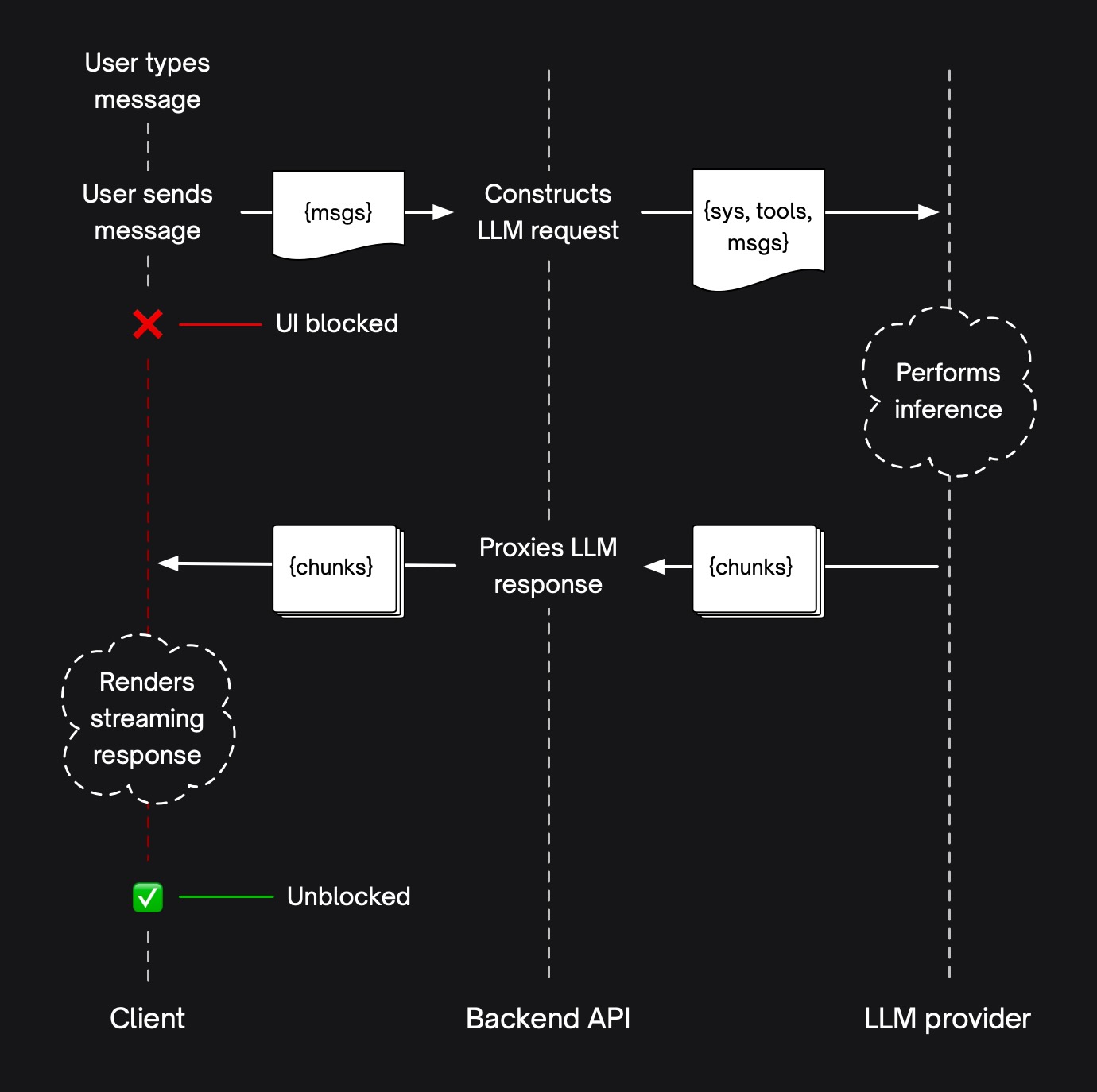

Requests are fragile and hard to resume. The UI blocks while they stream back. Local state isn't shared.

It's hard to support either real-time or async collaboration, both of which are key to product-led growth and enterprise adoption.

With sync, state is persistent, addressable and shared. You get multi-tab, multi-device, multi-user and multi-agent built in.

You unlock product-led growth and can weave your product into your customers' workflows and governance structures.

Start with the Quickstart. Dive deeper with the Docs and Demos.

Built into tools like Firebase and Supabase. Used by products like Trigger and Otto.